Gentle reader,

If you did not read last Wednesday’s piece on the Humanities and AI, you may find it here. Once again, I welcome your comments and ideas regarding this massive challenge for the future of education.

Yours,

John

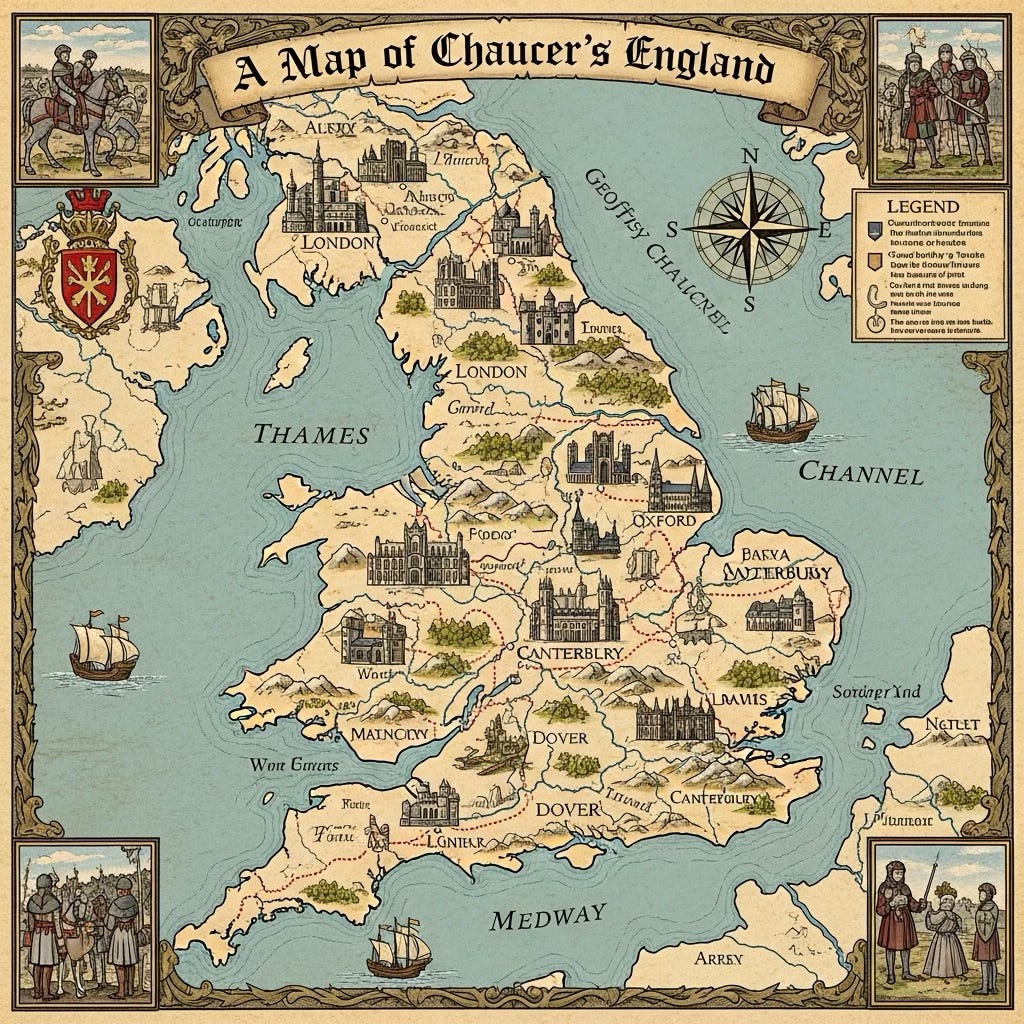

Last week’s installment generated a good deal of interesting discussion, which I will get to shortly. First, I want to provide some evidence that AI is not good at everything and that students cannot rely on it in all contexts. I asked Gemini (Google’s AI product) to draw me a map of Chaucer’s England, and here is what it came up with:

Where to start? In addition to there being two Londons and two Dovers, all in the wrong places (one London in Scotland!), there are also two Canterblrys [sic], which may make us think of Chaucer’s masterpiece, The Canterblry Tales. The Thames has replaced the Irish Sea, and the North Sea is now, apparently, called the “Geoffisy Chaucnel.” There are, indeed, nonsensical names all over the map. And in what language was the “legend” written? Geminese? What are those people in the corners of the map meant to be doing? Oh, and check out the compass.

So, needless to say, a student looking to cheat on an historical geography exam should look elsewhere. But, of course, this is not what most of us are worried about: these LLMs seem to be much better at language than at cartography.

So, what should we do in the humanities?

Your responses last week were all fascinating and ranged from the dystopian to the (moderately) optimistic to the philosophical.

One reader wrote:

The problem is that for teaching and learning to have integrity, everyone has to bring their best effort. AI is cheating, and everyone knows it. There are no clear standards, no reliable methods of detection as there has been for plagiarism in the past, and so the notion that an instructor assigns reading and designs writing assignments that a student works through on their own is now patently absurd. Students use AI to "read" (giving them pithy summaries) and to "write."

It’s hard to argue with this, though there are some potential approaches that can acknowledge this reality.

Another reader considered some potential uses of the technology in the classroom:

Although if I was teaching a classic book I might use AI to delve into source material of the time. I’m rereading Bleak House and it would be interesting to read about the Court of Chancery in that era and whether there were similar cases to Jarndyce and Jarndyce. Contemporary newspaper articles. Real life people who Dickens might have used as models. That might be a good use of AI for literature.

Another reader suggested that a renewed emphasis on aesthetic approaches and in-class discussion and debate might help. And Substack’s design guru wrote a full-scale, caffeinated allegory of Miltonic dimensions about the issue, and concluded, regarding LLMs:

I think they'll be good for academia. They kill the illusion that posing as informed is worth a damn. They chase learning, knowing, communicating back into the interpersonal sphere. By embodying scale in their training, they render scale impossible: teachers may need to know students as individuals again; students may need to know teachers as individuals again; the dynamics of mentorship may return to a scene too long pushed towards impersonal, explicit, large-system nonsense. Who knows? Not me, not the Devil! But I really think this latest Satanic gambit is faltering right now, as we speak, and we should all be delighted that in a year or two, absolutely no one will think we're "on the cusp" of "AGI" or "superintelligence," or even likely to displace the simplest human being from whatever perch they occupy.

The map above may be hopeful evidence for this perspective. Also, considering the massive backlash against the recent release of GPT-5, with its continued hallucinations and unimpressive improvements (given the pre-release hype), there is some evidence that what the tech industry has given us is a giant bullshitting machine, albeit a bullshitting machine with some uses.

Of course, on the other side of the argument is the “Godfather of AI” (and Nobel Prize winner) Geoffrey Hinton, who predicts that artificial super-intelligence is only a decade or so away, at which point he posits that it will have an intelligence advantage over us that is similar to the advantage that we enjoy over our dogs.

But in the short term what we have is a technology that makes it easier for students to give up reading, writing, and thinking altogether, at which point we may (justifiably) ask, what is the point of our teaching at all?

Now, at this point I’m going to pause in my drafting and ask Gemini what it thinks of the issue. I have cut and pasted the piece so far (except for that bit about the map) into the chatbot, and here is its response. The Substack interface wouldn’t let me put this in a block quote, so I’ll place it between two page dividers:

Gemini’s response:

This is a great question that gets to the heart of the challenges and opportunities presented by large language models (LLMs) in education, particularly in the humanities. Rather than a single solution, the most effective approach likely involves a combination of strategies that leverage the new technology while reinforcing the core goals of a humanities education.

Reorienting Educational Goals

The rise of LLMs forces a re-evaluation of what we want students to learn. If these tools can handle the mechanics of writing and information synthesis, the focus should shift to the higher-order skills that AIs can't replicate.

Emphasize Human-Centric Skills: A humanities education has always been about more than just knowledge recall. The goal is to develop critical thinking, ethical reasoning, creativity, and effective communication. We can emphasize these skills by designing assignments that require students to connect course material to their own lives, to engage in nuanced debate, or to produce something genuinely original. The emphasis should be on the process of thinking and creating, not just the final product.

Focus on In-Person Interaction: As Mills Baker suggests, LLMs may push us to reclaim the value of in-person, interpersonal engagement. This means more class discussions, oral presentations, and group projects. These activities make it difficult to rely on an AI for a meaningful contribution. This shift also encourages students to develop their public speaking and collaboration skills.

Move Beyond the Essay: The traditional research paper or essay is the most susceptible to AI misuse. While we shouldn't abandon it entirely, we can supplement it with alternative forms of assessment. This could include portfolios of work that show a student’s progress over time, creative projects, or the creation of multimedia content. For example, instead of a paper on a novel, a student could create a short film or podcast that analyzes its themes.

<br> <hr> <br>

New Approaches to Assignments and Assessment

We can adapt our assignments to make the use of an LLM less helpful or even counterproductive, thereby changing the context of the work.

Incorporate LLMs into the Assignments: Instead of treating LLMs as a form of cheating, we can integrate them into the curriculum. For instance, an assignment might ask students to use an AI to generate a paper on a topic and then require them to critically evaluate, correct, and rewrite the AI's output. This teaches students about the technology’s limitations, such as its tendency to "hallucinate" or provide biased information, while also strengthening their editing and critical analysis skills.

Personalize Assignments: When assignments require students to use their unique experiences, opinions, or personal voice, AI output becomes generic and easy to spot. For example, asking students to reflect on a reading in light of a personal anecdote or to write a letter to a historical figure from their own perspective makes the work uniquely their own.

Shift the Focus to Source Analysis: As David Roberts suggests, we can design assignments that focus on the process of research. For instance, students could be asked to use LLMs as a starting point to find relevant primary sources—such as historical documents, newspaper articles, or personal letters—and then to write an analysis based solely on those sources, not on the AI’s summary. This trains them to become skilled researchers.

<br> <hr> <br>

Embracing the Inevitable

Rather than fighting this new technology, we can see it as an opportunity to return to the core principles of the humanities. The skills of close reading, critical thinking, and nuanced communication are more valuable than ever in a world flooded with AI-generated information. By focusing on these timeless skills and adapting our teaching methods, we can help students navigate a changing world and ultimately find a greater purpose in their education. The challenge is not to "beat" the AI, but to teach students to think and create in ways that AI simply can't.

OK, that was Gemini. Now, here is John again:

Not bad. I give it a solid B+. I think that what Gemini shows us here is that AI is really good at figuring out what we want to hear from it. Notice how it uses the pronoun “we” and cites the commenters generously. However, the final heading is somewhat jarring: “Embracing the Inevitable.” Despite the borderline sycophantic courting of humanists here, the message is ultimately:

Get ready, puny teachers, because your tech overlords have decided what’s best for us, and there is nothing that you can do about it. And if you try to fight, we will simply replace you. Retreat into your little poetry silo, and get out of our way.

As the poet Robert Pinsky once said to me during a campus visit at Tulane: “The sycophant is always the assassin.”

So, here is what I am doing this semester, which is not incompatible with some of our commenters’ suggestions or (gasp!) Gemini’s suggestions. I am deploying a two-pronged attack (sorry for the military metaphor; I can’t help it in this context):

In the British Literature survey course, which is mostly non-English-majors, there will be a zero-tolerance policy for AI. All writing will be done in class, with pen and paper, and in supervised group work, with no devices allowed. Since it’s essentially a course on reading (just as English Composition is a course on writing), that will be our focus. And they won’t be able to rely on summaries, because their work will be responding to specific passages from the texts that we are studying. We will also be doing a lot of in-class discussion, again, focusing on particular passages in the context of the work as a whole. Students will also listen to this interview on “deep reading” with Maryanne Wolf:

In the Literary Criticism and Theory course, which is entirely made up of English majors, we will address these issues head-on. We will begin the semester by discussing N. Katherine Hayles’ “How We Read: Close, Hyper, Machine,” followed by some Platonic dialogues. These dialogues will serve as models, because for the rest of the semester we will be producing written dialogues of our own as we embark upon “The Socrates Project.”

This is an experiment, and I don’t know how it’s going to go, which, from what I understand as a non-STEM person, is how experiments work.

Here is the assignment that my students will be working on this semester:

The Socrates Project

Due Date: Weekly, via Google Drive

Objective: The Socrates Project is a weekly assignment that asks you to engage with our course readings in a conversational format. The goal is to think through complex ideas by "talking" about them, either with a peer or with an AI partner. This project will serve as a foundation for our in-class discussions and will help you develop your ability to articulate and defend your ideas.

The Assignment: Each week, you will produce a Socratic dialogue based on that week's assigned readings. You will alternate between working with a human partner and working with an AI.

Human Dialogue: For these weeks, you will work with one of your peers. Your dialogue should be a written conversation between the two of you, in which you work through a question or a problem raised by the week's readings.

AI Dialogue: For these weeks, you will use a generative AI model to create a dialogue. In class, we will collaboratively develop prompts to guide the AI, and you will use those prompts to produce a written conversation with the AI.

Weekly Process:

Reading: Complete the assigned readings for the week.

Prompting: In class, we will discuss the readings and collectively develop a question or a set of prompts to guide our dialogues for the week.

Dialogue: You will write your dialogue, either with your human partner or with the AI. The dialogue should be a minimum of 1000 words and should be submitted as a single document. It is not about reaching a final answer, but about exploring the question from multiple angles.

Submission: Upload your completed dialogue to our shared Google Drive folder by the day that we discuss your assigned reading.

What is a "Socratic Dialogue"? A Socratic dialogue is a form of inquiry, modeled on Plato's fourth-century-BCE Socratic dialogues, in which two or more people explore a complex idea by asking and answering questions. It's a conversation focused on questioning assumptions, testing ideas, and seeking a deeper understanding. The dialogue should not be a report on the readings but a genuine intellectual exploration of them. We will be reading a couple of Plato's Socratic dialogues at the beginning of the semester, and these will serve as models.

Using the Dialogues: The dialogues we produce will serve a dual purpose. First, they will stimulate our in-class discussions, serving as a starting point for our collective intellectual work. Second, over the course of the semester, we will use them to contemplate the differences between human dialogue and dialogue with AI. This will allow us to consider fundamental questions about the nature of thought, creativity, and conversation in the digital age.

Final Project: During the final week of class, we will spend our time collating and discussing all of the dialogues produced over the semester. We will collectively produce a report that summarizes our findings and reflections on the differences we observed. This report will then be shared at the ILC's Conference on Teaching and Learning in May.

Remember: This project is not about having the "right" answers. It is about the process of questioning, exploring, and collaborating. The dialogues you produce will be the fuel for our class discussions.

Students will also have to write two major papers during the semester, for which they may not use AI, though they may cite the dialogues that we have produced. Of course, the idea that they will not use AI for these assignments will be based on the mutual trust that we will, hopefully, build during the course of the semester.

So, what do you think? Does this have legs? I look forward to your comments.

Thanks for reading, from my fancy internet machine to yours.

Speaking as a non-academic, I think I can say that those of you on the front lines of the Humanities are the true heroes of our era! You have the opportunity to reshape education and make the Humanities the centerpiece of our culture. I admire your approach of experimenting and sharing feedback on how different strategies might work.

I'm sure this increases the teaching burden with zero additional compensation, but if it's any consolation, I have found the Humanities the most important and long-lasting part of my education. My only regret is that I never reached out to thank all those responsible.

Gemini made me part of the nefarious "we!"

Recent ChatGPT "mishap." My wife and I dozed too much during the first episode of Unforgotten, Season 5. We started episode two and realized we had no idea who was who. So I asked GPT for a summary of the first episode. It confused us more because it was just wrong about who and where the characters were. It was annoying, then funny how wrong it was. Similar to its map attempt in your post.

I do think it's good for research. But mostly to go a level deeper. I needed to know cutting-edge nail polish colors in 2012 (a novel set then) and for that it was great. I could then go to Google and click through to ads for the color I chose (for the name: Malice Red).

And I still think that humanities classrooms should have "dumb" devices that just allow a student to type out an essay. I pity the teacher who'd have to decipher my handwriting. Or I pity myself for having to write in block letters so slowly to make myself legible that I wouldn't be able to complete my essay.